DevOps, which has transformed the software development process in line with agile practices, can be effectively leveraged in ML as MLOps, making it a strategic choice for advanced machine learning applications.

In this article, we explore the importance of data as a differentiator in ML and how the DevOps approach can benefit ML projects.

Let’s start with a question – can DevOps practices help improve machine learning?

DevOps vs. MLOps

You might be familiar with DevOps.

DevOps is a set of tools and practices that automates and integrates the processes between software development and operations teams in order to shorten the development life cycle.

Machine Learning DevOps (MLOps), on the other hand, can be seen as a subset of DevOps that specializes in machine learning applications and projects. Though MLOps and DevOps may sound similar, a closer look is required to understand the difference.

Today, organizations are harnessing the power of data and moving to a data-driven organizational culture. This reliance on data is the main difference between DevOps and MLOps.

MLOps emphasizes the process of locating relevant data and training the algorithm on these data sets to return accurate predictions. If the correct data sets are unavailable, one cannot train the algorithm to deliver the required results, which makes any further development and operations work in machine learning a futile exercise.

3-Step Process for Utilizing DevOps in ML

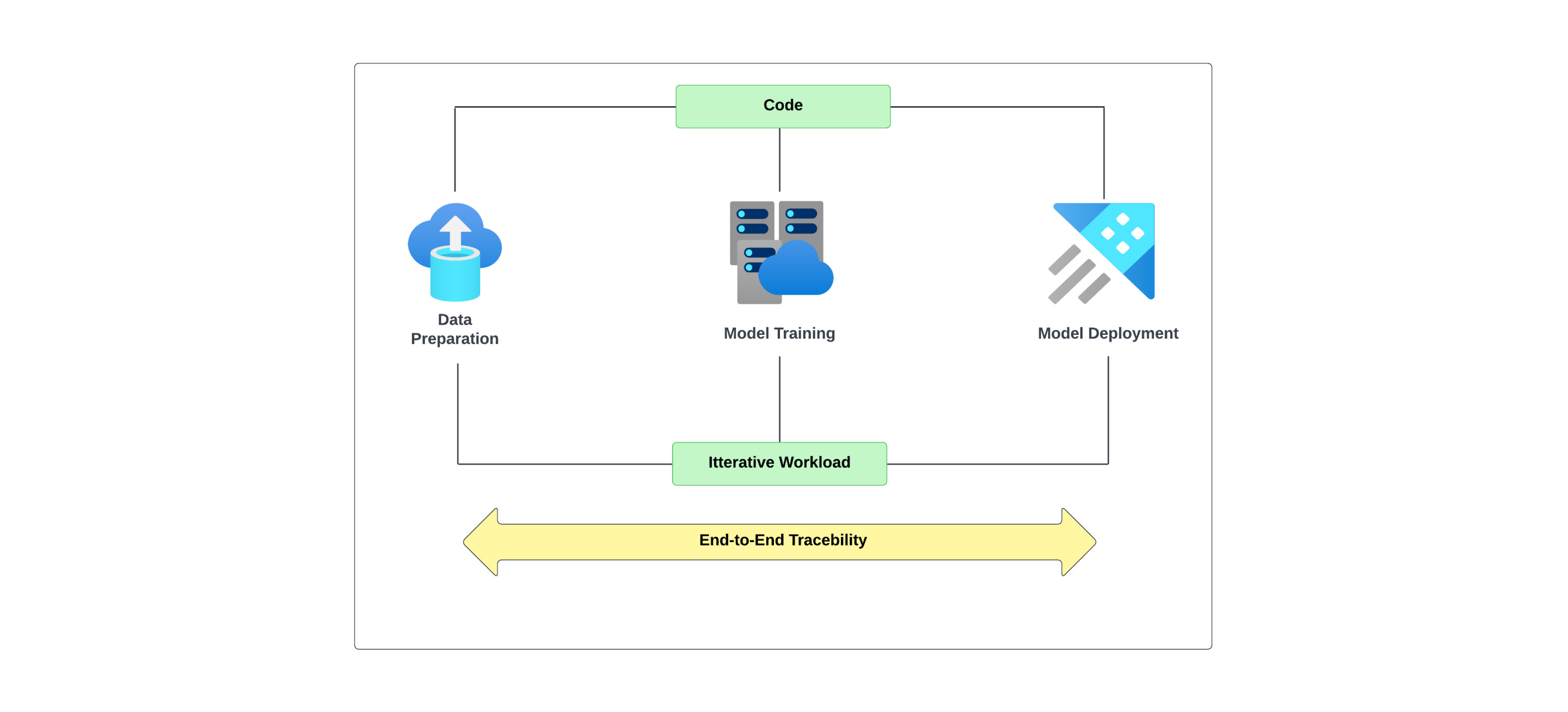

Machine learning comprises various steps, from data preparation to model training and deployment.

Here we will provide a high-level overview of the process.

Step 1: Data Preparation

The first step in machine learning is data preparation.

Data quality makes the difference between accurate and flawed results. The “garbage in, garbage out” problem has plagued analytics and decision-making for generations.

Poor-quality data affects a machine learning workload in two ways: first, in the historical data used to train the ML model and, second, in the new data the model uses to make future decisions. Therefore, it is essential to have relevant, good-quality data in the required formats.
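As a rough illustration of the point above, the sketch below runs the same validation over a batch of rows, which could be either historical training data or new data arriving for prediction. The schema (`age`, `income`, `label`) and the age range are hypothetical and chosen only for this example.

```python
from typing import Any

# Hypothetical schema, used only for this illustration.
REQUIRED_FIELDS = ("age", "income", "label")


def validate_rows(rows: list[dict[str, Any]]) -> tuple[list[dict[str, Any]], list[str]]:
    """Split rows into clean rows and a list of human-readable problems."""
    clean, problems = [], []
    for i, row in enumerate(rows):
        # Reject rows with missing required values.
        missing = [f for f in REQUIRED_FIELDS if row.get(f) is None]
        if missing:
            problems.append(f"row {i}: missing {', '.join(missing)}")
            continue
        # Reject rows with implausible values (assumed valid range: 0 to 120).
        if not 0 <= row["age"] <= 120:
            problems.append(f"row {i}: age out of range ({row['age']})")
            continue
        clean.append(row)
    return clean, problems


rows = [
    {"age": 34, "income": 52000, "label": 1},
    {"age": None, "income": 41000, "label": 0},
    {"age": 250, "income": 38000, "label": 0},
]
clean, problems = validate_rows(rows)
print(len(clean), "clean rows")
for problem in problems:
    print(problem)
```

Running one shared check at both training time and prediction time is one way to keep the two data paths consistent.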

Step 2: Model Training

The second step in machine learning is model training.

Here, you choose a suitable algorithm, fit the model, and then regularize it so that it generalizes well. You can train a single model or set up training for a set of experiments such as hyperparameter optimization.

A hyperparameter in ML is a parameter whose value you set to control the learning process. The values of other parameters, like node weights, are derived via model training.
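To make the distinction concrete, here is a minimal sketch of a grid search on a toy problem. The learning rate and the number of epochs are hyperparameters chosen by us, while the weight `w` is derived by training. The data, the grid values, and the function names are all assumptions made for illustration; a real search would score each candidate on held-out validation data rather than on the training data.

```python
from itertools import product


def train(xs, ys, learning_rate, epochs):
    """Fit y = w * x by gradient descent; w is the learned parameter."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w


def mse(w, xs, ys):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data generated from y = 2x.
xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]

# Hypothetical hyperparameter grid: every combination is one experiment.
grid = {"learning_rate": [0.001, 0.01, 0.05], "epochs": [10, 100]}

results = []
for lr, epochs in product(grid["learning_rate"], grid["epochs"]):
    w = train(xs, ys, lr, epochs)
    results.append({"learning_rate": lr, "epochs": epochs, "w": w, "mse": mse(w, xs, ys)})

best = min(results, key=lambda r: r["mse"])
print(best)
```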

In the ML model training process, you train the model by feeding it datasets. What the model learns here determines how accurately it will function once it is put into an application for end users.

Model validation then checks how accurately the model works on the training data and whether it keeps that accuracy on a new dataset that was not used during training.
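The idea of checking accuracy on data that was not used for training can be sketched with a simple hold-out split. The one-feature threshold classifier and the toy data below are assumptions for illustration and stand in for a real model.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and split off a held-out test set."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def fit_threshold(train_rows):
    """'Train' a one-feature classifier: the midpoint between the class means."""
    pos = [x for x, y in train_rows if y == 1]
    neg = [x for x, y in train_rows if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def accuracy(threshold, rows):
    """Fraction of rows where 'x > threshold' matches the label."""
    return sum((x > threshold) == bool(y) for x, y in rows) / len(rows)


# Toy data: feature values below 5 are class 0, above 5 are class 1.
data = [(float(x), int(x > 5)) for x in (1, 2, 3, 4, 6, 7, 8, 9)]

train_rows, test_rows = train_test_split(data)
threshold = fit_threshold(train_rows)
print("train accuracy:", accuracy(threshold, train_rows))
print("test accuracy:", accuracy(threshold, test_rows))
```

A large gap between the two numbers would suggest the model has memorized the training data instead of learning a pattern that carries over to new data.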

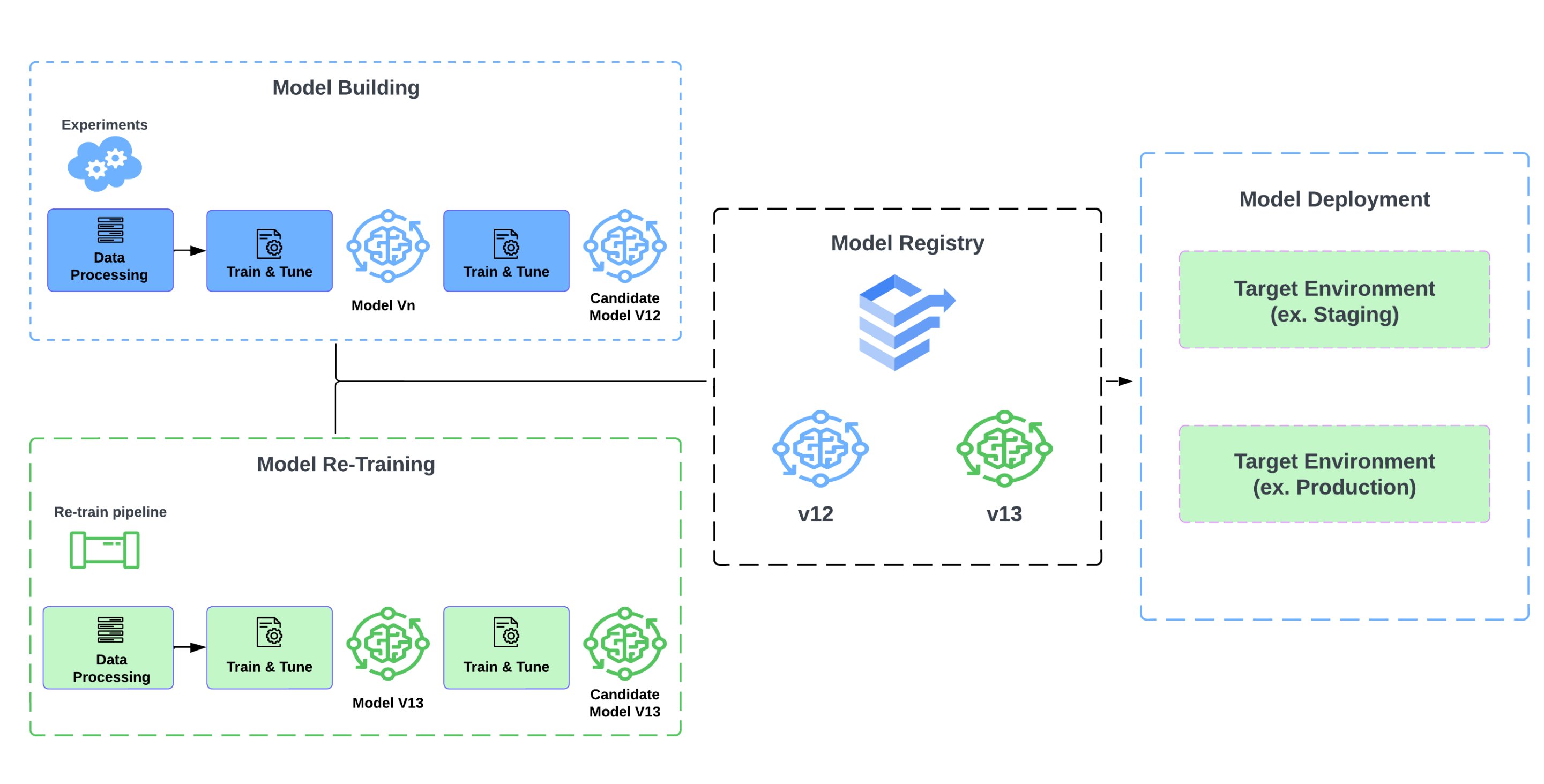

Once the model meets the predetermined success metrics, it is stored in the central model registry. The registry keeps all metadata about the model, such as model lineage, parameters, and metrics.
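The registration step can be pictured with a small in-memory sketch. This is not the API of any particular registry product; the class names, the `min_accuracy` threshold, and the lineage fields are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    name: str
    version: int
    params: dict
    metrics: dict
    lineage: dict  # e.g. dataset version and code commit (hypothetical fields)


@dataclass
class ModelRegistry:
    min_accuracy: float = 0.9  # hypothetical success threshold
    _models: dict = field(default_factory=dict)

    def register(self, name, params, metrics, lineage):
        """Store the model only if it passes the metric gate; else return None."""
        if metrics.get("accuracy", 0.0) < self.min_accuracy:
            return None
        versions = self._models.setdefault(name, [])
        model_version = ModelVersion(name, len(versions) + 1, params, metrics, lineage)
        versions.append(model_version)
        return model_version

    def latest(self, name):
        """Return the most recently registered version of a model."""
        return self._models[name][-1]


registry = ModelRegistry(min_accuracy=0.9)
rejected = registry.register(
    "churn", {"max_depth": 3}, {"accuracy": 0.82}, {"dataset_version": "abc123"}
)
accepted = registry.register(
    "churn", {"max_depth": 5}, {"accuracy": 0.93}, {"dataset_version": "abc123"}
)
print(rejected)
print(accepted)
```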

Step 3: ML Model Deployment

ML model deployment is the last step.

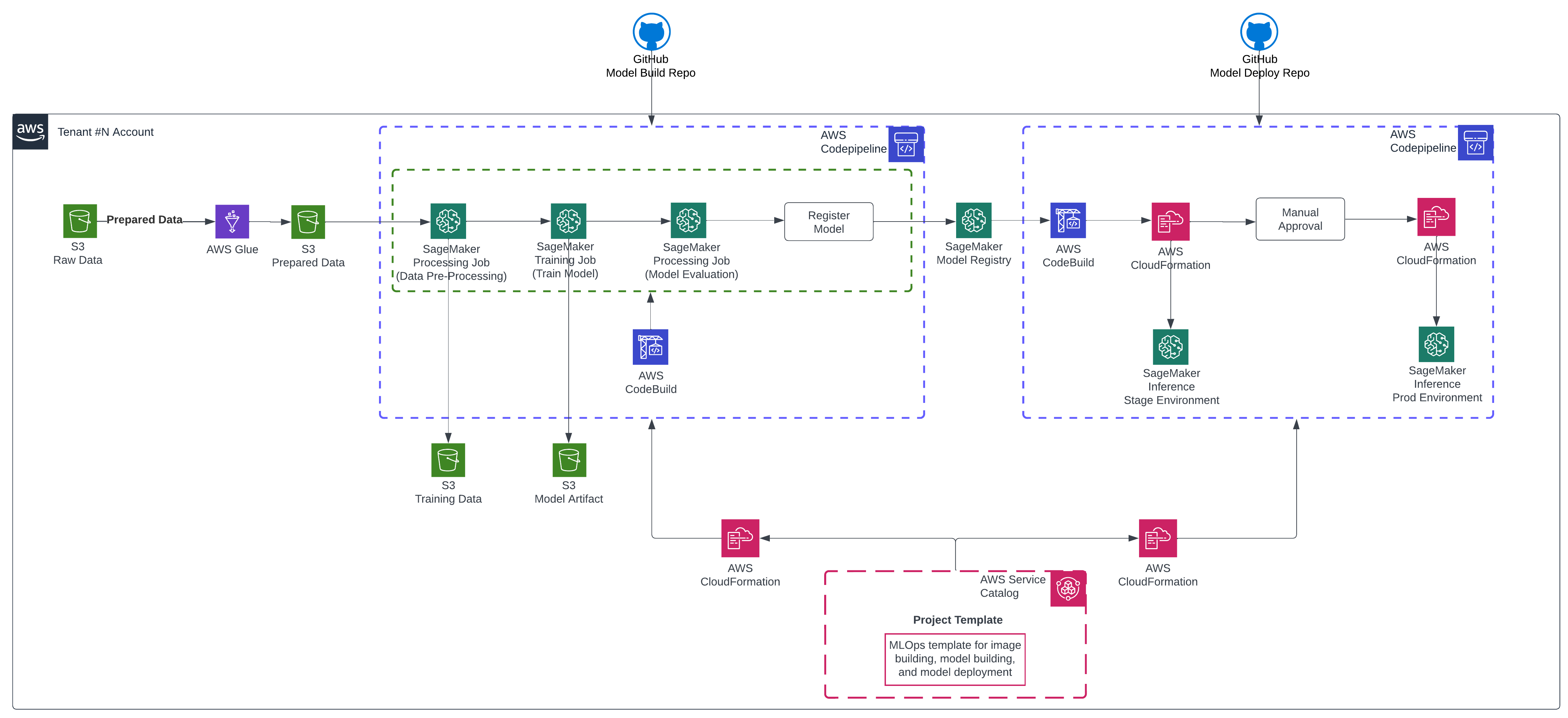

As in software deployment, ML model deployment moves through sequential environments such as staging and production. Quality gates, including automated checks and manual approvals, add controls to the deployment pipeline.
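A minimal sketch of such a gated promotion is shown below. The environment names follow the text, while the gate functions, the 0.9 accuracy threshold, and the `approved_by` field are assumptions for illustration.

```python
from typing import Callable

Gate = Callable[[dict], bool]


def metric_gate(model: dict) -> bool:
    """Automated gate: the model must reach an assumed accuracy threshold."""
    return model["metrics"]["accuracy"] >= 0.9


def manual_approval(model: dict) -> bool:
    """Manual gate: someone must have signed off on the release."""
    return model.get("approved_by") is not None


# Environments in order, each with the gates that guard entry to it.
PIPELINE: list[tuple[str, list[Gate]]] = [
    ("staging", [metric_gate]),
    ("production", [metric_gate, manual_approval]),
]


def promote(model: dict) -> list[str]:
    """Return the environments the model reached before a gate stopped it."""
    reached = []
    for environment, gates in PIPELINE:
        if not all(gate(model) for gate in gates):
            break
        reached.append(environment)
    return reached


candidate = {"metrics": {"accuracy": 0.95}}
print(promote(candidate))  # stops before production: no approval yet

candidate["approved_by"] = "release-manager"
print(promote(candidate))
```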

Deploying ML models includes disparate pipelines and workflows with many different steps and dependencies that need to work together to provide the ability to iterate through the ML lifecycle. These pipelines need to provide end-to-end traceability so that you can track the models’ training, metrics, and deployment.

Essential DevOps Practices

Building an ML application and deploying it involves various steps. All these steps and tasks that we have just discussed can be challenging, and it is even more challenging when we apply DevOps practices like using source code with version control for every step in the CI/CD pipeline.

Versioning source code is a standard practice in software development and DevOps. In machine learning, data is the differentiator. Data sets also need tracking and versioning because they are an input at every stage of the machine learning workflow. Model training results need comprehensive, end-to-end pipeline tracking as well.
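One simple way to think about data versioning is a content fingerprint: the same data always yields the same identifier, and any change yields a new one. The sketch below uses a SHA-256 hash over a canonical JSON form. The function name and the run record layout are assumptions, and dedicated data versioning tools handle far more than this.

```python
import hashlib
import json


def dataset_version(rows):
    """Return a short, deterministic fingerprint of the dataset contents."""
    # Sorting keys makes the fingerprint independent of key order.
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


v1 = dataset_version([{"age": 34, "label": 1}])
v2 = dataset_version([{"label": 1, "age": 34}])  # same content, different key order
v3 = dataset_version([{"age": 35, "label": 1}])  # one value changed

print(v1 == v2)  # same data, same version
print(v1 == v3)  # changed data, new version

# Recording the fingerprint with each training run links results to their input.
run_record = {"dataset_version": v1, "params": {"max_depth": 5}, "metrics": {"accuracy": 0.93}}
print(run_record["dataset_version"] == v1)
```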

In ML, it is essential to transform data and design features so that the data is machine-readable.

This process is known as feature engineering, and its quality influences the model's predictive performance. Data scientists and developers spend over 60% of their time designing and creating features, and producing new features often means repeating the same feature engineering work.
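As a small illustration of feature engineering, the sketch below turns one raw record into a flat set of numeric features. The record fields, the plan categories, and the derived features are hypothetical.

```python
from datetime import date

# Hypothetical set of categories for the "plan" field.
PLANS = ("free", "pro", "enterprise")


def engineer_features(record, today):
    """Turn one raw customer record into a flat numeric feature dictionary."""
    signup = date.fromisoformat(record["signup_date"])
    features = {
        # Date converted to a number the model can use.
        "tenure_days": (today - signup).days,
        # Ratio feature; max() guards against division by zero.
        "avg_order_value": record["total_spend"] / max(record["orders"], 1),
    }
    # One-hot encode the categorical plan field.
    for plan in PLANS:
        features[f"plan_{plan}"] = int(record["plan"] == plan)
    return features


raw = {"signup_date": "2023-01-01", "plan": "pro", "total_spend": 300.0, "orders": 4}
features = engineer_features(raw, today=date(2023, 1, 31))
print(features)
```

Keeping a function like this in one shared place is the kind of reuse that a feature store aims to provide across teams and projects.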

Amazon SageMaker Feature Store is a great option that offers a fully managed central repository for ML features. It stores features securely, makes them easy to retrieve, and saves a lot of time.

SageMaker Data Wrangler is another tool, focused on data preparation: it lets you import, clean, and transform data and engineer features from a single interface before handing the result to model training.

Various components are needed to build end-to-end pipelines. General CI/CD tools and their existing integrations are not purpose-built for machine learning. It is advisable to use a tool that is purpose-built for ML (for data preparation and model training) and has an orchestration layer for implementing CI/CD practices.

There is no single, easy pipeline for DevOps or, for that matter, MLOps. The challenges lie in technical implementation, organizational structure, differing integration requirements, regulatory requirements, and so on.

This is why DevOps practices for machine learning are essential. They let us standardize and productionize the steps, and MLOps platforms like Amazon SageMaker are moving in the right direction by integrating DevOps best practices.

Customer Journey in ML Automation

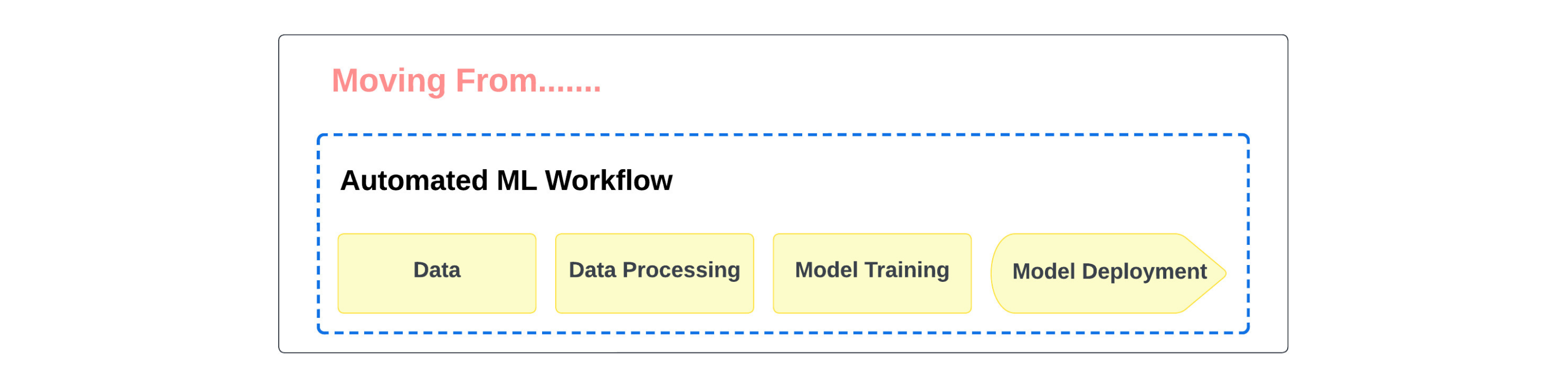

Building and deploying an ML application involves various steps like accessing and preparing data, developing features and training models, making production data pipelines, and deploying the model, followed by further monitoring configurations.

Customers usually initiate task automation within the ML workflow by applying some level of orchestration to determine the sequential execution of tasks in a pipeline.
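A basic form of this orchestration is to declare which task depends on which and let a scheduler derive the execution order. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later). The task names are assumptions, and the task bodies are placeholders that only print.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (hypothetical task names).
workflow = {
    "prepare_data": set(),
    "build_features": {"prepare_data"},
    "train_model": {"build_features"},
    "evaluate_model": {"train_model"},
    "deploy_model": {"evaluate_model"},
}


def run(workflow, tasks):
    """Execute tasks in dependency order and return the order used."""
    executed = []
    for name in TopologicalSorter(workflow).static_order():
        tasks[name]()
        executed.append(name)
    return executed


# Placeholder task bodies; a real pipeline would call actual jobs here.
tasks = {name: (lambda n=name: print("running", n)) for name in workflow}

order = run(workflow, tasks)
print(order)
```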

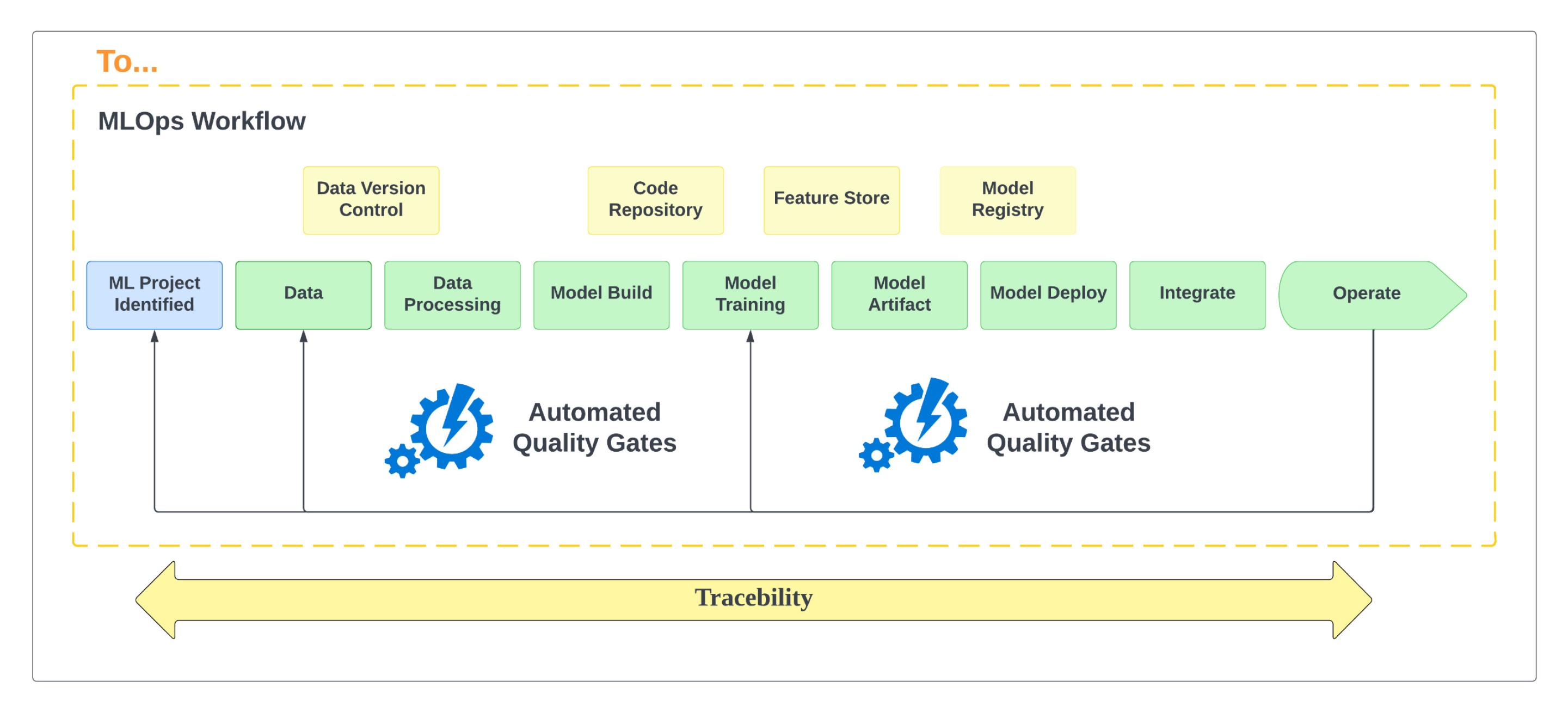

An ML workflow incorporates various MLOps practices, such as:

- Code Repository

- Data Version Control

- Feature Store (for sharing and discovering curated features)

- Model artifact versioning (managing model version at scale and establishing traceability)

The MLOps workflow has two distinct phases: model building activities and model deployment activities. These two phases involve different personas, so the idea is to establish as much automation as possible between them, orchestrate this automation, and develop automated quality gates.

The DevOps practices that are necessary for MLOps include source code versioning, automated model building and deployment, and quality gates.

MLOps Accelerator

Built on the Amazon SageMaker Pipelines service, the MLOps Accelerator is a new capability of Amazon SageMaker that makes it easy for data scientists and engineers to build, automate, and scale end-to-end machine learning pipelines.

Features like MLOps templates automatically provision the underlying resources needed to enable CI/CD capabilities for your ML development lifecycle. You can learn more about the MLOps Accelerator.

Wrapping Everything Up

Adopting a DevOps approach through MLOps, which is purpose-built for data-driven projects, benefits ML projects.

Moreover, tools like Amazon SageMaker Feature Store make it faster, more secure, and more efficient to transform data, which speeds up machine learning projects. Organizations that adopt these tools early can gain a competitive advantage.

Sign up for a free consultation for MLOps consulting at Neurons Lab so we can guide you to a customized solution that delivers results.